This page was generated from notebooks/MarkovBasedMethods.ipynb.

Interactive online version:

Spatially Explicit Markov Methods¶

Author: Serge Rey sjsrey@gmail.com, Wei Kang weikang9009@gmail.com

Introduction¶

This notebook introduces Discrete Markov Chains (DMC) model and its two variants which explicitly incorporate spatial effects. We will demonstrate the usage of these methods by an empirical study for understanding regional income dynamics in the US. The dataset is the per capita incomes observed annually from 1929 to 2009 for the lower 48 US states.

Note that a full execution of this notebook requires pandas, matplotlib and PySAL’s light-weight geovisualization package - splot.

Classic Markov¶

giddy.markov.Markov(self, class_ids, classes=None)

We start with a look at a simple example of classic DMC methods implemented in PySAL’s giddy. A Markov chain may be in one of \(k\) different states/classes at any point in time. These states are exhaustive and mutually exclusive. If one had a time series of remote sensing images used to develop land use classifications, then the states could be defined as the specific land use classes and interest would center on the transitions in and out of different classes for each pixel.

For example, suppose there are 5 pixels, each of which takes on one of 3 states ("a", "b", "c") at 3 consecutive periods:

[1]:

import numpy as np

c = np.array(

[

["b", "a", "c"],

["c", "c", "a"],

["c", "b", "c"],

["a", "a", "b"],

["a", "b", "c"],

]

)

So the first pixel was in state "b" in period 1, state "a" in period 2, and state "c" in period 3. Each pixel’s trajectory (row) owns Markov property, meaning that which state a pixel takes on today is only dependent on its immediate past.

Let’s suppose that all the 5 pixels are governed by the same transition dynamics rule. That is, each trajectory is a realization of a Discrete Markov Chain process. We could pool all the 5 trajectories from which to estimate a transition probability matrix. To do that, we utlize the Markov class in giddy:

[2]:

import warnings

with warnings.catch_warnings():

warnings.simplefilter("ignore")

# ignore NumbaDeprecationWarning: gh-pysal/libpysal#560

import giddy

m = giddy.markov.Markov(c)

The Markov Chain is irreducible and is composed by:

1 Recurrent class (indices):

[0 1 2]

0 Transient classes.

The Markov Chain has 0 absorbing states.

You may turn off the summary for the Markov chain by assigning summary=False when initializing the Markov Chain.

[3]:

m = giddy.markov.Markov(c, summary=False)

In this way, we create a Markov instance - \(m\). Its attribute classes gives 3 unique classes these pixels can take on, which are "a", "b", and "c".

[4]:

print(m.classes)

['a' 'b' 'c']

[5]:

print(len(m.classes))

3

In addition to extracting the unique states as an attribute, our Markov instance will also have the attribute transitions which is a transition matrix counting the number of transitions from one state to another. Since there are 3 unique states, we will have a \((3,3)\) transtion matrix:

[6]:

print(m.transitions)

[[1. 2. 1.]

[1. 0. 2.]

[1. 1. 1.]]

The above transition matrix indicates that of the four pixels that began a transition interval in state "a", 1 remained in that state, 2 transitioned to state "b" and 1 transitioned to state "c". Another attribute p gives the transtion probability matrix which is the transition dynamics rule ubiquitous to all the 5 pixels across the 3 periods. The maximum likehood estimator for each element \(p_{i,j}\) is shown below where \(n_{i,j}\) is the number of transitions from

state \(i\) to state \(j\) and \(k\) is the number of states (here \(k=3\)):

\begin{equation} \hat{p}_{i,j} = \frac{n_{i,j}}{\sum_{q=1}^k n_{i,q} } \end{equation}

[7]:

print(m.p)

[[0.25 0.5 0.25 ]

[0.33333333 0. 0.66666667]

[0.33333333 0.33333333 0.33333333]]

This means that if any of the 5 pixels was in state "c", the probability of staying at "c" or transitioning to any other states ("a", "b") in the next period is the same (0.333). If a pixel was in state "b", there is a high possibility that it would take on state "c" in the next period because \(\hat{p}_{2,3}=0.667\).

[8]:

m.steady_state # steady state distribution

[8]:

array([0.30769231, 0.28846154, 0.40384615])

This simple example illustrates the basic creation of a Markov instance, but the small sample size makes it unrealistic for the more advanced features of this approach. For a larger example, we will look at an application of Markov methods to understanding regional income dynamics in the US. Here we will load in data on per capita incomes observed annually from 1929 to 2010 for the lower 48 US states:

Regional income dynamics in the US¶

Firstly, we load in data on per capita incomes observed annually from 1929 to 2009 for the lower 48 US states. We use the example dataset in libpysal which was downloaded from US Bureau of Economic Analysis.

[9]:

import libpysal

f = libpysal.io.open(libpysal.examples.get_path("usjoin.csv"))

pci = np.array([f.by_col[str(y)] for y in range(1929, 2010)])

print(pci.shape)

(81, 48)

The first row of the array is the per capita incomes for the 48 US states for the year 1929:

[10]:

print(pci[0, :])

[ 323 600 310 991 634 1024 1032 518 347 507 948 607 581 532

393 414 601 768 906 790 599 286 621 592 596 868 686 918

410 1152 332 382 771 455 668 772 874 271 426 378 479 551

634 434 741 460 673 675]

In order to apply the classic Markov approach to this series, we first have to discretize the distribution by defining our classes. There are many ways to do this including quantiles classification scheme, equal interval classification scheme, Fisher Jenks classification scheme, etc. For a list of classification methods, please refer to the pysal package mapclassify.

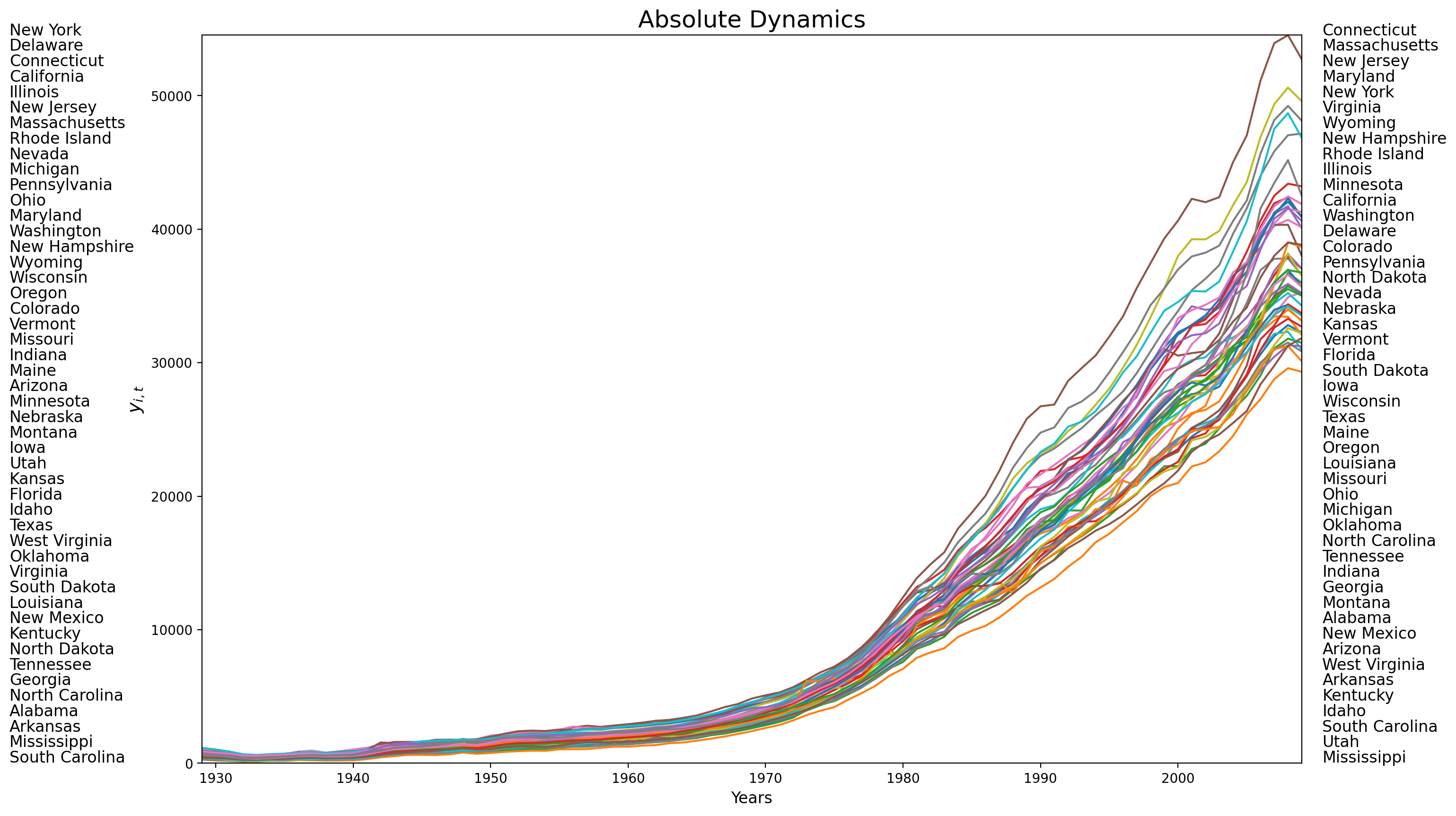

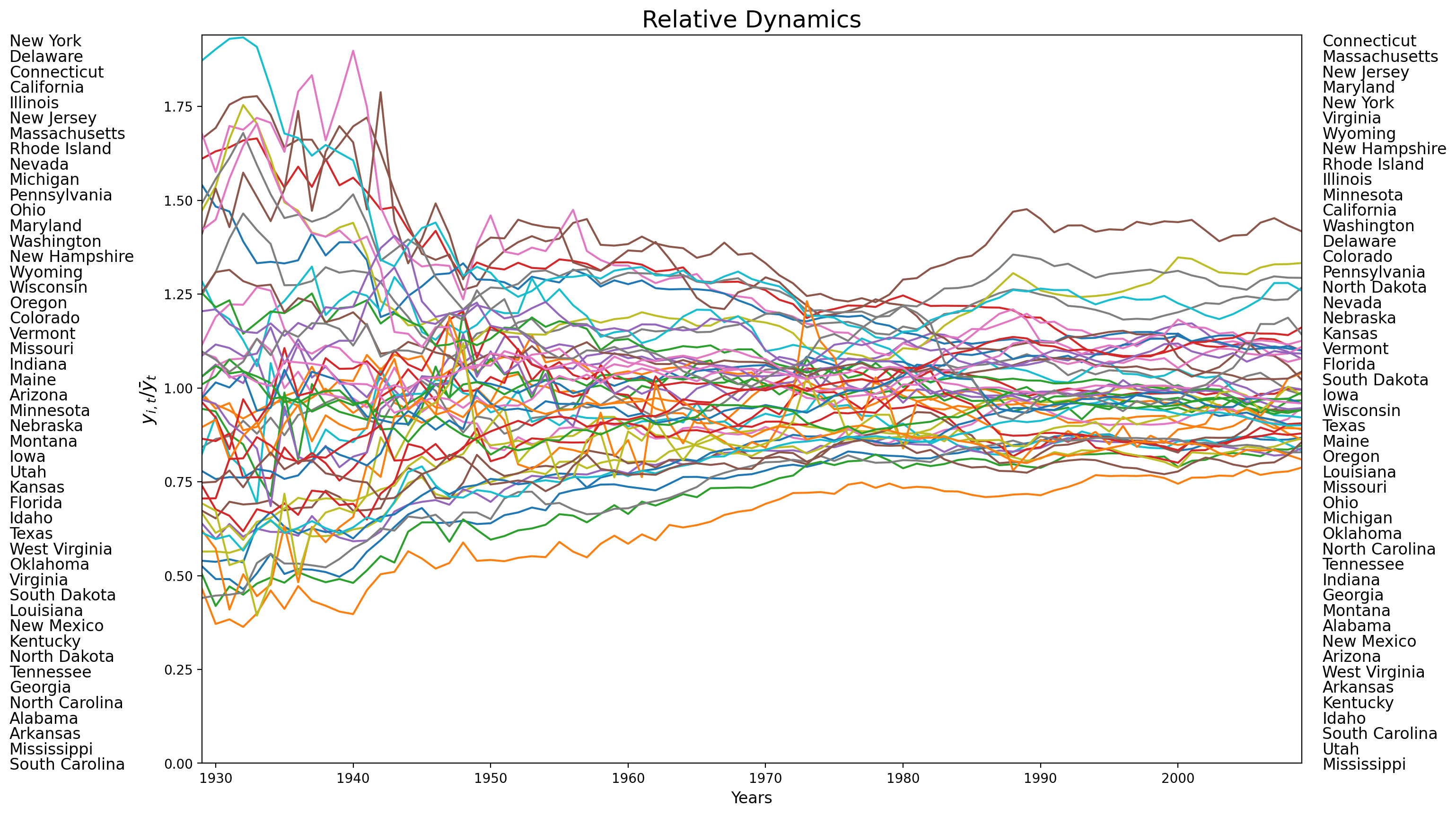

Here we will use the quintiles for each annual income distribution to define the classes. It should be noted that using quintiles for the pooled income distribution to define the classes will result in a different interpretation of the income dynamics. Quintiles for each annual income distribution (the former) will reveal more of relative income dynamics while those for the pooled income distribution (the latter) will provide insights in absolute dynamics.

[11]:

import matplotlib.pyplot as plt

%matplotlib inline

years = range(1929, 2010)

names = np.array(f.by_col("Name"))

order1929 = np.argsort(pci[0, :])

order2009 = np.argsort(pci[-1, :])

names1929 = names[order1929[::-1]]

names2009 = names[order2009[::-1]]

first_last = np.vstack((names1929, names2009))

from pylab import rcParams

rcParams["figure.figsize"] = 15, 10

plt.plot(years, pci)

for i in range(48):

plt.text(1915, 54530 - (i * 1159), first_last[0][i], fontsize=12)

plt.text(2010.5, 54530 - (i * 1159), first_last[1][i], fontsize=12)

plt.xlim((years[0], years[-1]))

plt.ylim((0, 54530))

plt.ylabel(r"$y_{i,t}$", fontsize=14)

plt.xlabel("Years", fontsize=12)

plt.title("Absolute Dynamics", fontsize=18)

[11]:

Text(0.5, 1.0, 'Absolute Dynamics')

[12]:

years = range(1929, 2010)

rpci = (pci.T / pci.mean(axis=1)).T

names = np.array(f.by_col("Name"))

order1929 = np.argsort(rpci[0, :])

order2009 = np.argsort(rpci[-1, :])

names1929 = names[order1929[::-1]]

names2009 = names[order2009[::-1]]

first_last = np.vstack((names1929, names2009))

rcParams["figure.figsize"] = 15, 10

plt.plot(years, rpci)

for i in range(48):

plt.text(1915, 1.91 - (i * 0.041), first_last[0][i], fontsize=12)

plt.text(2010.5, 1.91 - (i * 0.041), first_last[1][i], fontsize=12)

plt.xlim((years[0], years[-1]))

plt.ylim((0, 1.94))

plt.ylabel(r"$y_{i,t}/\bar{y}_t$", fontsize=14)

plt.xlabel("Years", fontsize=12)

plt.title("Relative Dynamics", fontsize=18)

[12]:

Text(0.5, 1.0, 'Relative Dynamics')

[13]:

import mapclassify as mc

q5 = np.array([mc.Quantiles(y, k=5).yb for y in pci]).transpose()

print(q5[:, 0])

[0 2 0 4 2 4 4 1 0 1 4 2 2 1 0 1 2 3 4 4 2 0 2 2 2 4 3 4 0 4 0 0 3 1 3 3 4

0 1 0 1 2 2 1 3 1 3 3]

[14]:

print(f.by_col("Name"))

['Alabama', 'Arizona', 'Arkansas', 'California', 'Colorado', 'Connecticut', 'Delaware', 'Florida', 'Georgia', 'Idaho', 'Illinois', 'Indiana', 'Iowa', 'Kansas', 'Kentucky', 'Louisiana', 'Maine', 'Maryland', 'Massachusetts', 'Michigan', 'Minnesota', 'Mississippi', 'Missouri', 'Montana', 'Nebraska', 'Nevada', 'New Hampshire', 'New Jersey', 'New Mexico', 'New York', 'North Carolina', 'North Dakota', 'Ohio', 'Oklahoma', 'Oregon', 'Pennsylvania', 'Rhode Island', 'South Carolina', 'South Dakota', 'Tennessee', 'Texas', 'Utah', 'Vermont', 'Virginia', 'Washington', 'West Virginia', 'Wisconsin', 'Wyoming']

A number of things need to be noted here. First, we are relying on the classification methods in mapclassify for defining our quintiles. The class Quantiles uses quintiles (\(k=5\)) as the default and will create an instance of this class that has multiple attributes, the one we are extracting in the first line is \(yb\) - the class id for each observation. The second thing to note is the transpose operator which gets our resulting array q5 in the proper structure required

for use of Markov. Thus we see that the first spatial unit (Alabama with an income of 323) fell in the first quintile in 1929, while the last unit (Wyoming with an income of 675) fell in the fourth quintile.

So now we have a time series for each state of its quintile membership. For example, Colorado’s quintile time series is:

[15]:

print(q5[4, :])

[2 3 2 2 3 2 2 3 2 2 2 2 2 2 2 2 3 2 3 2 3 2 3 3 3 2 2 3 3 3 3 3 3 3 3 3 3

2 2 2 3 3 3 3 3 3 3 3 3 3 3 4 4 4 4 4 4 3 3 3 3 3 3 3 3 3 4 4 4 4 4 4 4 4

3 3 3 4 3 3 3]

indicating that it has occupied the 3rd, 4th, and 5th quintiles in the distribution at the first 3 periods. To summarize the transition dynamics for all units, we instantiate a Markov object:

[16]:

m5 = giddy.markov.Markov(q5)

The Markov Chain is irreducible and is composed by:

1 Recurrent class (indices):

[0 1 2 3 4]

0 Transient classes.

The Markov Chain has 0 absorbing states.

The number of transitions between any two quintile classes could be counted:

[17]:

print(m5.transitions)

[[729. 71. 1. 0. 0.]

[ 72. 567. 80. 3. 0.]

[ 0. 81. 631. 86. 2.]

[ 0. 3. 86. 573. 56.]

[ 0. 0. 1. 57. 741.]]

By assuming the first-order Markov property, time homogeneity, spatial homogeneity and spatial independence, a transition probability matrix could be estimated which holds for all the 48 US states across 1929-2010:

[18]:

print(m5.p)

[[0.91011236 0.0886392 0.00124844 0. 0. ]

[0.09972299 0.78531856 0.11080332 0.00415512 0. ]

[0. 0.10125 0.78875 0.1075 0.0025 ]

[0. 0.00417827 0.11977716 0.79805014 0.07799443]

[0. 0. 0.00125156 0.07133917 0.92740926]]

The fact that each of the 5 diagonal elements is larger than \(0.78\) indicates a high stability of US regional income dynamics system.

Another very important feature of DMC model is the steady state distribution \(\pi\) (also called limiting distribution) defined as \(\pi p = \pi\). The attribute steady_state gives \(\pi\) as follows:

[19]:

print(m5.steady_state)

[0.20774716 0.18725774 0.20740537 0.18821787 0.20937187]

If the distribution at \(t\) is a steady state distribution as shown above, then any distribution afterwards is the same distribution.

With the transition probability matrix in hand, we can estimate the mean first passage time which is the average number of steps to go from a state/class to another state for the first time:

[20]:

print(giddy.ergodic.mfpt(m5.p))

[[ 4.81354357 11.50292712 29.60921231 53.38594954 103.59816743]

[ 42.04774505 5.34023324 18.74455332 42.50023268 92.71316899]

[ 69.25849753 27.21075248 4.82147603 25.27184624 75.43305672]

[ 84.90689329 42.85914824 17.18082642 5.31299186 51.60953369]

[ 98.41295543 56.36521038 30.66046735 14.21158356 4.77619083]]

Thus, for a state with income in the first quintile, it takes on average 11.5 years for it to first enter the second quintile, 29.6 to get to the third quintile, 53.4 years to enter the fourth, and 103.6 years to reach the richest quintile.

Regional context and Moran’s Is¶

Thus far we have treated all the spatial units as independent to estimate the transition probabilities. This hides an implicit assumption: the movement of a spatial unit in the income distribution is independent of the movement of its neighbors or the position of the neighbors in the distribution. But what if spatial context matters??

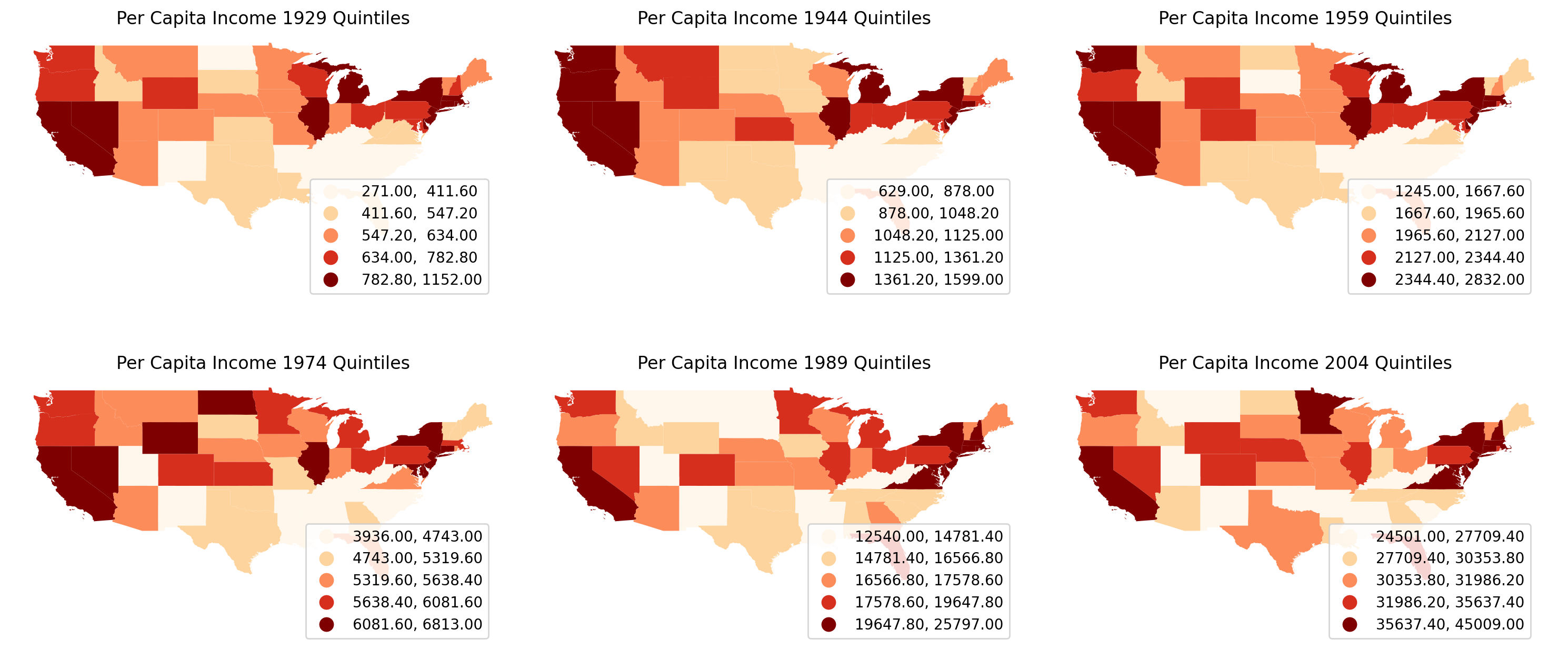

We could plot the choropleth maps of per capita incomes of US states to get a first impression of the spatial distribution.

[21]:

import geopandas as gpd

import pandas as pd

[22]:

geo_table = gpd.read_file(libpysal.examples.get_path("us48.shp"))

income_table = pd.read_csv(libpysal.examples.get_path("usjoin.csv"))

complete_table = geo_table.merge(income_table, left_on="STATE_NAME", right_on="Name")

complete_table.head()

[22]:

| AREA | PERIMETER | STATE_ | STATE_ID | STATE_NAME | STATE_FIPS_x | SUB_REGION | STATE_ABBR | geometry | Name | ... | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 20.750 | 34.956 | 1 | 1 | Washington | 53 | Pacific | WA | MULTIPOLYGON (((-122.40075 48.22540, -122.4615... | Washington | ... | 31528 | 32053 | 32206 | 32934 | 34984 | 35738 | 38477 | 40782 | 41588 | 40619 |

| 1 | 45.132 | 34.527 | 2 | 2 | Montana | 30 | Mtn | MT | POLYGON ((-111.47463 44.70224, -111.48001 44.6... | Montana | ... | 22569 | 24342 | 24699 | 25963 | 27517 | 28987 | 30942 | 32625 | 33293 | 32699 |

| 2 | 9.571 | 18.899 | 3 | 3 | Maine | 23 | N Eng | ME | MULTIPOLYGON (((-69.77779 44.07407, -69.86044 ... | Maine | ... | 25623 | 27068 | 27731 | 28727 | 30201 | 30721 | 32340 | 33620 | 34906 | 35268 |

| 3 | 21.874 | 21.353 | 4 | 4 | North Dakota | 38 | W N Cen | ND | POLYGON ((-98.73006 45.93830, -99.00645 45.939... | North Dakota | ... | 25068 | 26118 | 26770 | 29109 | 29676 | 31644 | 32856 | 35882 | 39009 | 38672 |

| 4 | 22.598 | 22.746 | 5 | 5 | South Dakota | 46 | W N Cen | SD | POLYGON ((-102.78793 42.99532, -103.00541 42.9... | South Dakota | ... | 26115 | 27531 | 27727 | 30072 | 31765 | 32726 | 33320 | 35998 | 38188 | 36499 |

5 rows × 92 columns

[23]:

with warnings.catch_warnings():

warnings.simplefilter("ignore")

# ignore geopandas/plotting.py:732:

# FutureWarning: is_categorical_dtype is deprecated and will be removed in a future version.

index_year = range(1929, 2010, 15)

fig, axes = plt.subplots(nrows=2, ncols=3, figsize=(15, 7))

for i in range(2):

for j in range(3):

ax = axes[i, j]

complete_table.plot(

ax=ax,

column=str(index_year[i * 3 + j]),

cmap="OrRd",

scheme="quantiles",

legend=True,

)

ax.set_title(f"Per Capita Income {index_year[i*3+j]} Quintiles")

ax.axis("off")

leg = ax.get_legend()

leg.set_bbox_to_anchor((0.8, 0.15, 0.16, 0.2))

plt.tight_layout()

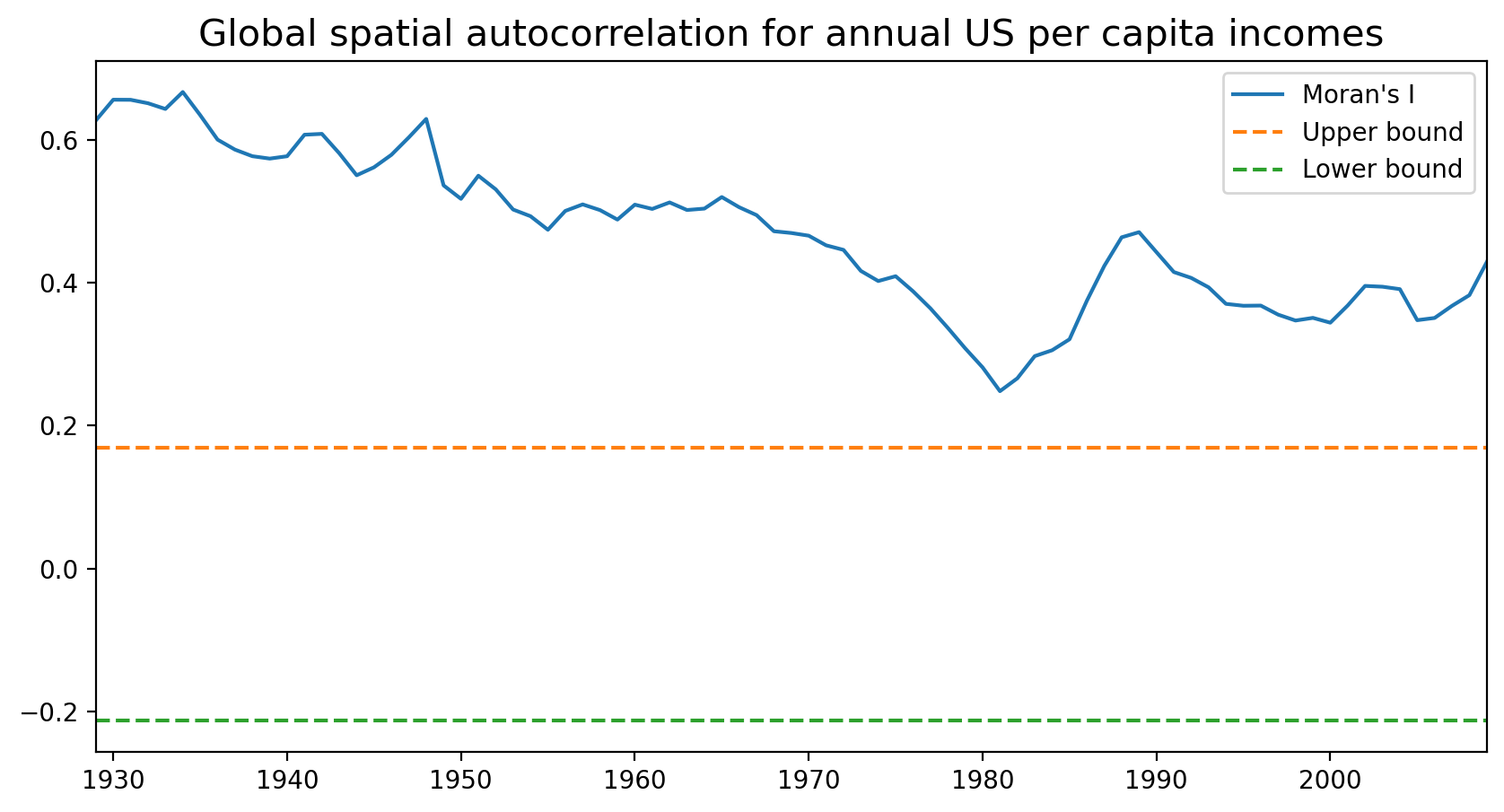

It is quite obvious that the per capita incomes are not randomly distributed: we could spot clusters in the mid-south, south-east and north-east. Let’s proceed to calculate Moran’s I, a widely used measure of global spatial autocorrelation, to aid the visual interpretation.

[24]:

from esda.moran import Moran

import matplotlib.pyplot as plt

%matplotlib inline

w = libpysal.io.open(libpysal.examples.get_path("states48.gal")).read()

w.transform = "R"

mits = [Moran(cs, w) for cs in pci]

res = np.array([(mi.I, mi.EI, mi.seI_norm, mi.sim[974]) for mi in mits])

years = np.arange(1929, 2010)

fig, ax = plt.subplots(nrows=1, ncols=1, figsize=(10, 5))

ax.plot(years, res[:, 0], label="Moran's I")

ax.plot(years, res[:, 1] + 1.96 * res[:, 2], label="Upper bound", linestyle="dashed")

ax.plot(years, res[:, 1] - 1.96 * res[:, 2], label="Lower bound", linestyle="dashed")

ax.set_title(

"Global spatial autocorrelation for annual US per capita incomes",

fontdict={"fontsize": 15},

)

ax.set_xlim([1929, 2009])

ax.legend()

[24]:

<matplotlib.legend.Legend at 0x16bcd6990>

From the above figure we could observe that Moran’s I value was always positive and significant for each year across 1929-2009. In other words, US regional income series are not independent of each other and regional context could be important in shaping the regional income dynamics. However, the classic Markov approach is silent on this issue. We turn to the spatially explict Markov methods - Spatial Markov and LISA Markov - for an explicit incorporation of space in understanding US regional income distribution dynamics.

Spatial Markov¶

giddy.markov.Spatial_Markov(

y, w, k=4, m=4, permutations=0, fixed=True, discrete=False, cutoffs=None, lag_cutoffs=None, variable_name=None

)

Spatial Markov is an extension to class Markov allowing for a more comprehensive analysis of the spatial dimensions of the transitional dynamics (Rey, 2001). Here, whether the transition probabilities are dependent on regional context is investigated and quantified. Rather than estimating one transition probability matrix, spatial Markov requires estimation of \(k\) transition probability matrices, each of which is conditional on the regional context at the preceding period. The regional context is usually formalized by spatial lag - the weighted average income level of neighbors:

where \(W\) is the spatial weight matrix and \(w_{r,s}\) represents the weight that spatial unit \(s\) contributes to the local context of spatial unit \(r\) at time period \(t\).

Similar to the construction of a Markov instance, we could create a Spatial Markov instance by utilizing the Spatial_Markov class in giddy. The only difference between the adoption of Markov and Spatial_Markov class is that the latter accepts the original continuous income data while the former requires a pre-classification/discretization. In other words, here we do not need to apply the classification methods in mapclassify as we did earlier. In fact, the Spatial

Markov class nested the quantile classification methods and all we need to do is set the desired number of classes k when creating the Spatial_Markov instance. Here, we set k=5 (quintile classes) as before.

Different from before, quintiles are defined for the pooled relative incomes (by standardizing by each period by the mean). This is achieved by setting the parameter fixed=True.

[25]:

?giddy.markov.Spatial_Markov

Init signature:

giddy.markov.Spatial_Markov(

y,

w,

k=4,

m=4,

permutations=0,

fixed=True,

discrete=False,

cutoffs=None,

lag_cutoffs=None,

variable_name=None,

fill_empty_classes=False,

)

Docstring:

Markov transitions conditioned on the value of the spatial lag.

Parameters

----------

y : array

(n, t), one row per observation, one column per state of

each observation, with as many columns as time periods.

w : W

spatial weights object.

k : integer, optional

number of classes (quantiles) for input time series y.

Default is 4. If discrete=True, k is determined

endogenously.

m : integer, optional

number of classes (quantiles) for the spatial lags of

regional time series. Default is 4. If discrete=True,

m is determined endogenously.

permutations : int, optional

number of permutations for use in randomization based

inference (the default is 0).

fixed : bool, optional

If true, discretization are taken over the entire n*t

pooled series and cutoffs can be user-defined. If

cutoffs and lag_cutoffs are not given, quantiles are

used. If false, quantiles are taken each time period

over n. Default is True.

discrete : bool, optional

If true, categorical spatial lags which are most common

categories of neighboring observations serve as the

conditioning and fixed is ignored; if false, weighted

averages of neighboring observations are used. Default is

false.

cutoffs : array, optional

users can specify the discretization cutoffs for

continuous time series. Default is None, meaning that

quantiles will be used for the discretization.

lag_cutoffs : array, optional

users can specify the discretization cutoffs for the

spatial lags of continuous time series. Default is

None, meaning that quantiles will be used for the

discretization.

variable_name : string

name of variable.

fill_empty_classes: bool

If True, assign 1 to diagonal elements which fall in rows

full of 0s to ensure each conditional transition

probability matrix is a stochastic matrix (each row

sums up to 1). In other words, the probability of

staying at that state is 1.

Attributes

----------

class_ids : array

(n, t), discretized series if y is continuous. Otherwise

it is identical to y.

classes : array

(k, 1), all different classes (bins).

lclass_ids : array

(n, t), spatial lag series.

lclasses : array

(k, 1), all different classes (bins) for

spatial lags.

p : array

(k, k), transition probability matrix for a-spatial

Markov.

s : array

(k, ), steady state distribution for a-spatial Markov.

f : array

(k, k), first mean passage times for a-spatial Markov.

transitions : array

(k, k), counts of transitions between each state i and j

for a-spatial Markov.

T : array

(m, k, k), counts of transitions for each conditional

Markov. T[0] is the matrix of transitions for

observations with lags in the 0th quantile; T[m-1] is the

transitions for the observations with lags in the m-1th.

P : array

(m, k, k), transition probability matrix for spatial

Markov first dimension is the conditioned on the lag.

S : arraylike

(m, k), steady state distributions for spatial Markov.

Each row is a conditional steady state distribution.

If one (or more) spatially conditional Markov chain is

reducible (having more than 1 steady state distribution),

this attribute is an array of m arrays of varying

dimensions.

F : array

(m, k, k),first mean passage times.

First dimension is conditioned on the spatial lag.

shtest : list

(k elements), each element of the list is a tuple for a

multinomial difference test between the steady state

distribution from a conditional distribution versus the

overall steady state distribution: first element of the

tuple is the chi2 value, second its p-value and the third

the degrees of freedom.

chi2 : list

(k elements), each element of the list is a tuple for a

chi-squared test of the difference between the

conditional transition matrix against the overall

transition matrix: first element of the tuple is the chi2

value, second its p-value and the third the degrees of

freedom.

x2 : float

sum of the chi2 values for each of the conditional tests.

Has an asymptotic chi2 distribution with k(k-1)(k-1)

degrees of freedom. Under the null that transition

probabilities are spatially homogeneous.

(see chi2 above)

x2_dof : int

degrees of freedom for homogeneity test.

x2_pvalue : float

pvalue for homogeneity test based on analytic.

distribution

x2_rpvalue : float

(if permutations>0)

pseudo p-value for x2 based on random spatial

permutations of the rows of the original transitions.

x2_realizations : array

(permutations,1), the values of x2 for the random

permutations.

Q : float

Chi-square test of homogeneity across lag classes based

on :cite:`Bickenbach2003`.

Q_p_value : float

p-value for Q.

LR : float

Likelihood ratio statistic for homogeneity across lag

classes based on :cite:`Bickenbach2003`.

LR_p_value : float

p-value for LR.

dof_hom : int

degrees of freedom for LR and Q, corrected for 0 cells.

Notes

-----

Based on :cite:`Rey2001`.

The shtest and chi2 tests should be used with caution as they are based on

classic theory assuming random transitions. The x2 based test is

preferable since it simulates the randomness under the null. It is an

experimental test requiring further analysis.

Examples

--------

>>> import libpysal

>>> from giddy.markov import Spatial_Markov

>>> import numpy as np

>>> f = libpysal.io.open(libpysal.examples.get_path("usjoin.csv"))

>>> pci = np.array([f.by_col[str(y)] for y in range(1929,2010)])

>>> pci = pci.transpose()

>>> rpci = pci/(pci.mean(axis=0))

>>> w = libpysal.io.open(libpysal.examples.get_path("states48.gal")).read()

>>> w.transform = 'r'

Now we create a `Spatial_Markov` instance for the continuous relative per

capita income time series for 48 US lower states 1929-2009. The current

implementation allows users to classify the continuous incomes in a more

flexible way.

(1) Global quintiles to discretize the income data (k=5), and global

quintiles to discretize the spatial lags of incomes (m=5).

>>> sm = Spatial_Markov(rpci, w, fixed=True, k=5, m=5, variable_name='rpci')

We can examine the cutoffs for the incomes and cutoffs for the spatial lags

>>> sm.cutoffs

array([0.83999133, 0.94707545, 1.03242697, 1.14911154])

>>> sm.lag_cutoffs

array([0.88973386, 0.95891917, 1.01469758, 1.1183566 ])

Obviously, they are slightly different.

We now look at the estimated spatially lag conditioned transition

probability matrices.

>>> for p in sm.P:

... print(p)

[[0.96341463 0.0304878 0.00609756 0. 0. ]

[0.06040268 0.83221477 0.10738255 0. 0. ]

[0. 0.14 0.74 0.12 0. ]

[0. 0.03571429 0.32142857 0.57142857 0.07142857]

[0. 0. 0. 0.16666667 0.83333333]]

[[0.79831933 0.16806723 0.03361345 0. 0. ]

[0.0754717 0.88207547 0.04245283 0. 0. ]

[0.00537634 0.06989247 0.8655914 0.05913978 0. ]

[0. 0. 0.06372549 0.90196078 0.03431373]

[0. 0. 0. 0.19444444 0.80555556]]

[[0.84693878 0.15306122 0. 0. 0. ]

[0.08133971 0.78947368 0.1291866 0. 0. ]

[0.00518135 0.0984456 0.79274611 0.0984456 0.00518135]

[0. 0. 0.09411765 0.87058824 0.03529412]

[0. 0. 0. 0.10204082 0.89795918]]

[[0.8852459 0.09836066 0. 0.01639344 0. ]

[0.03875969 0.81395349 0.13953488 0. 0.00775194]

[0.0049505 0.09405941 0.77722772 0.11881188 0.0049505 ]

[0. 0.02339181 0.12865497 0.75438596 0.09356725]

[0. 0. 0. 0.09661836 0.90338164]]

[[0.33333333 0.66666667 0. 0. 0. ]

[0.0483871 0.77419355 0.16129032 0.01612903 0. ]

[0.01149425 0.16091954 0.74712644 0.08045977 0. ]

[0. 0.01036269 0.06217617 0.89637306 0.03108808]

[0. 0. 0. 0.02352941 0.97647059]]

The probability of a poor state remaining poor is 0.963 if their

neighbors are in the 1st quintile and 0.798 if their neighbors are

in the 2nd quintile. The probability of a rich economy remaining

rich is 0.976 if their neighbors are in the 5th quintile, but if their

neighbors are in the 4th quintile this drops to 0.903.

The global transition probability matrix is estimated:

>>> print(sm.p)

[[0.91461837 0.07503234 0.00905563 0.00129366 0. ]

[0.06570302 0.82654402 0.10512484 0.00131406 0.00131406]

[0.00520833 0.10286458 0.79427083 0.09505208 0.00260417]

[0. 0.00913838 0.09399478 0.84856397 0.04830287]

[0. 0. 0. 0.06217617 0.93782383]]

The Q and likelihood ratio statistics are both significant indicating

the dynamics are not homogeneous across the lag classes:

>>> "%.3f"%sm.LR

'170.659'

>>> "%.3f"%sm.Q

'200.624'

>>> "%.3f"%sm.LR_p_value

'0.000'

>>> "%.3f"%sm.Q_p_value

'0.000'

>>> sm.dof_hom

60

The long run distribution for states with poor (rich) neighbors has

0.435 (0.018) of the values in the first quintile, 0.263 (0.200) in

the second quintile, 0.204 (0.190) in the third, 0.0684 (0.255) in the

fourth and 0.029 (0.337) in the fifth quintile.

>>> sm.S.astype(float).round(8)

array([[0.43509425, 0.2635327 , 0.20363044, 0.06841983, 0.02932278],

[0.13391287, 0.33993305, 0.25153036, 0.23343016, 0.04119356],

[0.12124869, 0.21137444, 0.2635101 , 0.29013417, 0.1137326 ],

[0.0776413 , 0.19748806, 0.25352636, 0.22480415, 0.24654013],

[0.01776781, 0.19964349, 0.19009833, 0.25524697, 0.3372434 ]])

States with incomes in the first quintile with neighbors in the

first quintile return to the first quartile after 2.298 years, after

leaving the first quintile. They enter the fourth quintile after

80.810 years after leaving the first quintile, on average.

Poor states within neighbors in the fourth quintile return to the

first quintile, on average, after 12.88 years, and would enter the

fourth quintile after 28.473 years.

>>> for f in sm.F:

... print(f.round(8))

[[ 2.29835259 28.95614035 46.14285714 80.80952381 279.42857143]

[ 33.86549708 3.79459555 22.57142857 57.23809524 255.85714286]

[ 43.60233918 9.73684211 4.91085714 34.66666667 233.28571429]

[ 46.62865497 12.76315789 6.25714286 14.61564626 198.61904762]

[ 52.62865497 18.76315789 12.25714286 6. 34.1031746 ]]

[[ 7.46754205 9.70574606 25.76785714 74.53116883 194.23446197]

[ 27.76691978 2.94175577 24.97142857 73.73474026 193.4380334 ]

[ 53.57477715 28.48447637 3.97566318 48.76331169 168.46660482]

[ 72.03631562 46.94601483 18.46153846 4.28393653 119.70329314]

[ 77.17917276 52.08887197 23.6043956 5.14285714 24.27564033]]

[[ 8.24751154 6.53333333 18.38765432 40.70864198 112.76732026]

[ 47.35040872 4.73094099 11.85432099 34.17530864 106.23398693]

[ 69.42288828 24.76666667 3.794921 22.32098765 94.37966594]

[ 83.72288828 39.06666667 14.3 3.44668119 76.36702977]

[ 93.52288828 48.86666667 24.1 9.8 8.79255406]]

[[ 12.87974382 13.34847151 19.83446328 28.47257282 55.82395142]

[ 99.46114206 5.06359731 10.54545198 23.05133495 49.68944423]

[117.76777159 23.03735526 3.94436301 15.0843986 43.57927247]

[127.89752089 32.4393006 14.56853107 4.44831643 31.63099455]

[138.24752089 42.7893006 24.91853107 10.35 4.05613474]]

[[ 56.2815534 1.5 10.57236842 27.02173913 110.54347826]

[ 82.9223301 5.00892857 9.07236842 25.52173913 109.04347826]

[ 97.17718447 19.53125 5.26043557 21.42391304 104.94565217]

[127.1407767 48.74107143 33.29605263 3.91777427 83.52173913]

[169.6407767 91.24107143 75.79605263 42.5 2.96521739]]

(2) Global quintiles to discretize the income data (k=5), and global

quartiles to discretize the spatial lags of incomes (m=4).

>>> sm = Spatial_Markov(rpci, w, fixed=True, k=5, m=4, variable_name='rpci')

We can also examine the cutoffs for the incomes and cutoffs for the spatial

lags:

>>> sm.cutoffs

array([0.83999133, 0.94707545, 1.03242697, 1.14911154])

>>> sm.lag_cutoffs

array([0.91440247, 0.98583079, 1.08698351])

We now look at the estimated spatially lag conditioned transition

probability matrices.

>>> for p in sm.P:

... print(p)

[[0.95708955 0.03544776 0.00746269 0. 0. ]

[0.05825243 0.83980583 0.10194175 0. 0. ]

[0. 0.1294964 0.76258993 0.10791367 0. ]

[0. 0.01538462 0.18461538 0.72307692 0.07692308]

[0. 0. 0. 0.14285714 0.85714286]]

[[0.7421875 0.234375 0.0234375 0. 0. ]

[0.08550186 0.85130112 0.06319703 0. 0. ]

[0.00865801 0.06926407 0.86147186 0.05627706 0.004329 ]

[0. 0. 0.05363985 0.92337165 0.02298851]

[0. 0. 0. 0.13432836 0.86567164]]

[[0.95145631 0.04854369 0. 0. 0. ]

[0.06 0.79 0.145 0. 0.005 ]

[0.00358423 0.10394265 0.7921147 0.09677419 0.00358423]

[0. 0.01630435 0.13586957 0.75543478 0.0923913 ]

[0. 0. 0. 0.10204082 0.89795918]]

[[0.16666667 0.66666667 0. 0.16666667 0. ]

[0.03488372 0.80232558 0.15116279 0.01162791 0. ]

[0.00840336 0.13445378 0.70588235 0.1512605 0. ]

[0. 0.01171875 0.08203125 0.87109375 0.03515625]

[0. 0. 0. 0.03434343 0.96565657]]

We now obtain 4 (5,5) spatial lag conditioned transition probability

matrices instead of 5 as in case (1).

The Q and likelihood ratio statistics are still both significant.

>>> "%.3f"%sm.LR

'172.105'

>>> "%.3f"%sm.Q

'321.128'

>>> "%.3f"%sm.LR_p_value

'0.000'

>>> "%.3f"%sm.Q_p_value

'0.000'

>>> sm.dof_hom

45

(3) We can also set the cutoffs for relative incomes and their

spatial lags manually.

For example, we want the defining cutoffs to be [0.8, 0.9, 1, 1.2],

meaning that relative incomes:

* class 0: smaller than 0.8

* class 1: between 0.8 and 0.9

* class 2: between 0.9 and 1.0

* class 3: between 1.0 and 1.2

* class 4: larger than 1.2

>>> cc = np.array([0.8, 0.9, 1, 1.2])

>>> sm = Spatial_Markov(rpci, w, cutoffs=cc, lag_cutoffs=cc, variable_name='rpci')

>>> sm.cutoffs

array([0.8, 0.9, 1. , 1.2])

>>> sm.k

5

>>> sm.lag_cutoffs

array([0.8, 0.9, 1. , 1.2])

>>> sm.m

5

>>> for p in sm.P:

... print(p)

[[0.96703297 0.03296703 0. 0. 0. ]

[0.10638298 0.68085106 0.21276596 0. 0. ]

[0. 0.14285714 0.7755102 0.08163265 0. ]

[0. 0. 0.5 0.5 0. ]

[0. 0. 0. 0. 0. ]]

[[0.88636364 0.10606061 0.00757576 0. 0. ]

[0.04402516 0.89308176 0.06289308 0. 0. ]

[0. 0.05882353 0.8627451 0.07843137 0. ]

[0. 0. 0.13846154 0.86153846 0. ]

[0. 0. 0. 0. 1. ]]

[[0.78082192 0.17808219 0.02739726 0.01369863 0. ]

[0.03488372 0.90406977 0.05813953 0.00290698 0. ]

[0. 0.05919003 0.84735202 0.09034268 0.00311526]

[0. 0. 0.05811623 0.92985972 0.01202405]

[0. 0. 0. 0.14285714 0.85714286]]

[[0.82692308 0.15384615 0. 0.01923077 0. ]

[0.0703125 0.7890625 0.125 0.015625 0. ]

[0.00295858 0.06213018 0.82248521 0.10946746 0.00295858]

[0. 0.00185529 0.07606679 0.88497217 0.03710575]

[0. 0. 0. 0.07803468 0.92196532]]

[[0. 0. 0. 0. 0. ]

[0. 0. 0. 0. 0. ]

[0. 0.06666667 0.9 0.03333333 0. ]

[0. 0. 0.05660377 0.90566038 0.03773585]

[0. 0. 0. 0.03932584 0.96067416]]

(3.1) As we can see from the above estimated conditional transition

probability matrices, some rows are full of zeros and this violate the

requirement that each row of a transition probability matrix sums to 1.

We can easily adjust this assigning `fill_empty_classes = True` when initializing

`Spatial_Markov`.

>>> sm = Spatial_Markov(rpci, w, cutoffs=cc, lag_cutoffs=cc, fill_empty_classes=True)

>>> for p in sm.P:

... print(p)

[[0.96703297 0.03296703 0. 0. 0. ]

[0.10638298 0.68085106 0.21276596 0. 0. ]

[0. 0.14285714 0.7755102 0.08163265 0. ]

[0. 0. 0.5 0.5 0. ]

[0. 0. 0. 0. 1. ]]

[[0.88636364 0.10606061 0.00757576 0. 0. ]

[0.04402516 0.89308176 0.06289308 0. 0. ]

[0. 0.05882353 0.8627451 0.07843137 0. ]

[0. 0. 0.13846154 0.86153846 0. ]

[0. 0. 0. 0. 1. ]]

[[0.78082192 0.17808219 0.02739726 0.01369863 0. ]

[0.03488372 0.90406977 0.05813953 0.00290698 0. ]

[0. 0.05919003 0.84735202 0.09034268 0.00311526]

[0. 0. 0.05811623 0.92985972 0.01202405]

[0. 0. 0. 0.14285714 0.85714286]]

[[0.82692308 0.15384615 0. 0.01923077 0. ]

[0.0703125 0.7890625 0.125 0.015625 0. ]

[0.00295858 0.06213018 0.82248521 0.10946746 0.00295858]

[0. 0.00185529 0.07606679 0.88497217 0.03710575]

[0. 0. 0. 0.07803468 0.92196532]]

[[1. 0. 0. 0. 0. ]

[0. 1. 0. 0. 0. ]

[0. 0.06666667 0.9 0.03333333 0. ]

[0. 0. 0.05660377 0.90566038 0.03773585]

[0. 0. 0. 0.03932584 0.96067416]]

>>> sm.S[0]

array([[0.54148249, 0.16780007, 0.24991499, 0.04080245, 0. ],

[0. , 0. , 0. , 0. , 1. ]])

>>> sm.S[2]

array([0.03607655, 0.22667277, 0.25883041, 0.43607249, 0.04234777])

(4) `Spatial_Markov` also accepts discrete time series and calculates

categorical spatial lags on which several transition probability matrices

are conditioned.

Let's still use the US state income time series to demonstrate. We first

discretize them into categories and then pass them to `Spatial_Markov`.

>>> import mapclassify as mc

>>> y = mc.Quantiles(rpci.flatten(), k=5).yb.reshape(rpci.shape)

>>> np.random.seed(5)

>>> sm = Spatial_Markov(y, w, discrete=True, variable_name='discretized rpci')

>>> sm.k

5

>>> sm.m

5

>>> for p in sm.P:

... print(p)

[[0.94787645 0.04440154 0.00772201 0. 0. ]

[0.08333333 0.81060606 0.10606061 0. 0. ]

[0. 0.12765957 0.79787234 0.07446809 0. ]

[0. 0.02777778 0.22222222 0.66666667 0.08333333]

[0. 0. 0. 0.33333333 0.66666667]]

[[0.888 0.096 0.016 0. 0. ]

[0.06049822 0.84341637 0.09608541 0. 0. ]

[0.00666667 0.10666667 0.81333333 0.07333333 0. ]

[0. 0. 0.08527132 0.86821705 0.04651163]

[0. 0. 0. 0.10204082 0.89795918]]

[[0.65217391 0.32608696 0.02173913 0. 0. ]

[0.07446809 0.80851064 0.11170213 0. 0.00531915]

[0.01071429 0.1 0.76428571 0.11785714 0.00714286]

[0. 0.00552486 0.09392265 0.86187845 0.03867403]

[0. 0. 0. 0.13157895 0.86842105]]

[[0.91935484 0.06451613 0. 0.01612903 0. ]

[0.06796117 0.90291262 0.02912621 0. 0. ]

[0. 0.05755396 0.87769784 0.0647482 0. ]

[0. 0.02150538 0.10752688 0.80107527 0.06989247]

[0. 0. 0. 0.08064516 0.91935484]]

[[0.81818182 0.18181818 0. 0. 0. ]

[0.01754386 0.70175439 0.26315789 0.01754386 0. ]

[0. 0.14285714 0.73333333 0.12380952 0. ]

[0. 0.0042735 0.06837607 0.89316239 0.03418803]

[0. 0. 0. 0.03891051 0.96108949]]

File: ~/giddy/giddy/markov.py

Type: type

Subclasses:

[26]:

# spatial_markov instance o

sm = giddy.markov.Spatial_Markov(rpci.T, w, fixed=True, k=5, m=5)

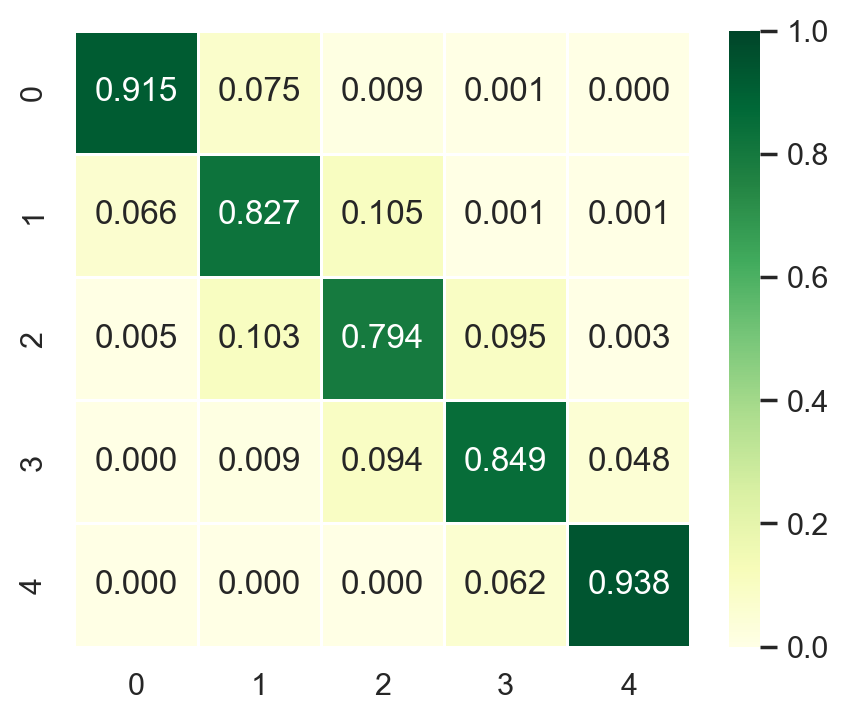

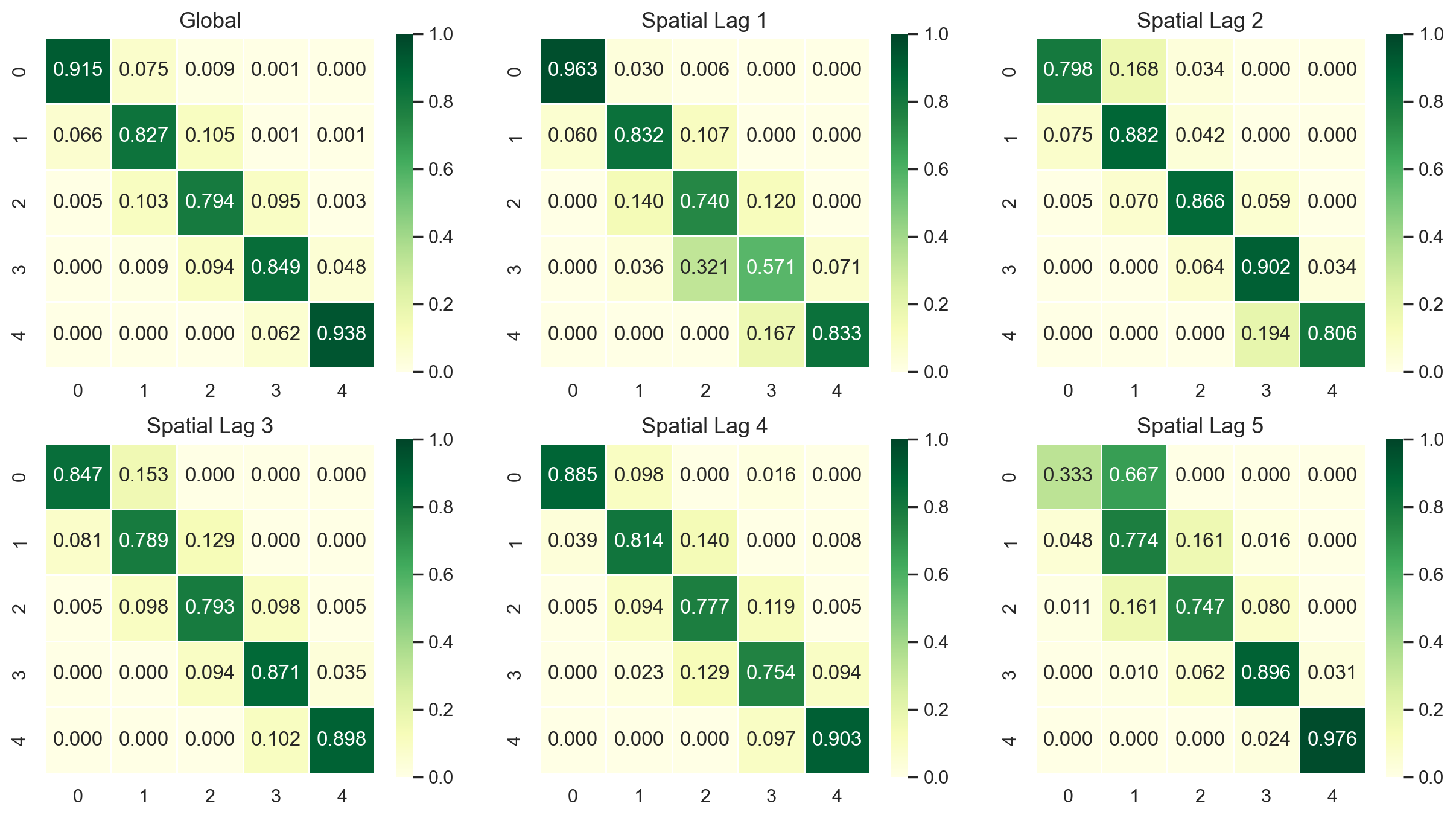

We can next examine the global transition probability matrix for relative incomes.

[27]:

print(sm.p)

[[0.91461837 0.07503234 0.00905563 0.00129366 0. ]

[0.06570302 0.82654402 0.10512484 0.00131406 0.00131406]

[0.00520833 0.10286458 0.79427083 0.09505208 0.00260417]

[0. 0.00913838 0.09399478 0.84856397 0.04830287]

[0. 0. 0. 0.06217617 0.93782383]]

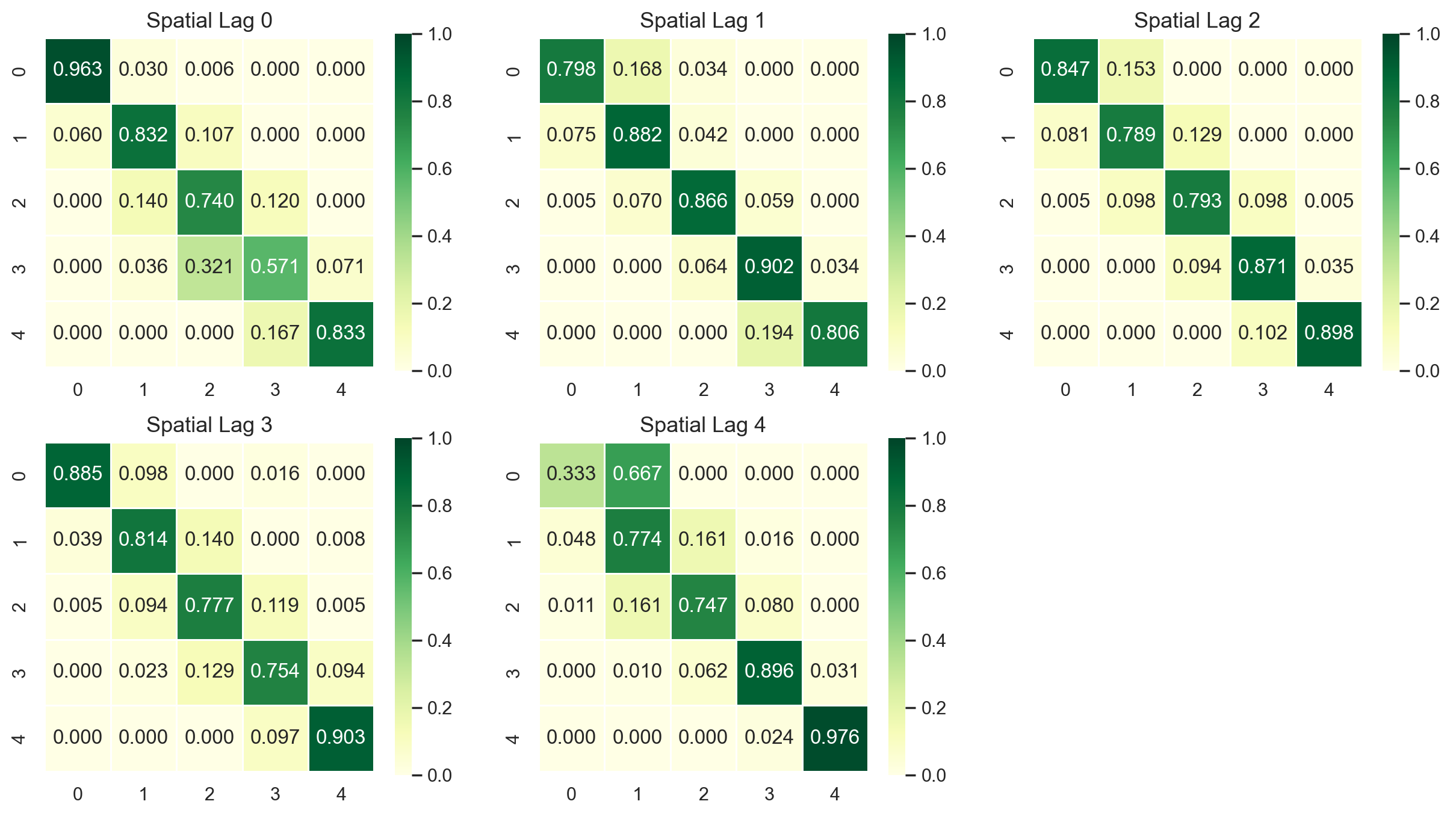

The Spatial Markov allows us to compare the global transition dynamics to those conditioned on regional context. More specifically, the transition dynamics are split across economies who have spatial lags in different quintiles at the preceding year. In our example we have 5 classes, so 5 different conditioned transition probability matrices are estimated - P(LAG0), P(LAG1), P(LAG2), P(LAG3), and P(LAG4).

[28]:

sm.summary()

--------------------------------------------------------------

Spatial Markov Test

--------------------------------------------------------------

Number of classes: 5

Number of transitions: 3840

Number of regimes: 5

Regime names: LAG0, LAG1, LAG2, LAG3, LAG4

--------------------------------------------------------------

Test LR Chi-2

Stat. 170.659 200.624

DOF 60 60

p-value 0.000 0.000

--------------------------------------------------------------

P(H0) C0 C1 C2 C3 C4

C0 0.915 0.075 0.009 0.001 0.000

C1 0.066 0.827 0.105 0.001 0.001

C2 0.005 0.103 0.794 0.095 0.003

C3 0.000 0.009 0.094 0.849 0.048

C4 0.000 0.000 0.000 0.062 0.938

--------------------------------------------------------------

P(LAG0) C0 C1 C2 C3 C4

C0 0.963 0.030 0.006 0.000 0.000

C1 0.060 0.832 0.107 0.000 0.000

C2 0.000 0.140 0.740 0.120 0.000

C3 0.000 0.036 0.321 0.571 0.071

C4 0.000 0.000 0.000 0.167 0.833

--------------------------------------------------------------

P(LAG1) C0 C1 C2 C3 C4

C0 0.798 0.168 0.034 0.000 0.000

C1 0.075 0.882 0.042 0.000 0.000

C2 0.005 0.070 0.866 0.059 0.000

C3 0.000 0.000 0.064 0.902 0.034

C4 0.000 0.000 0.000 0.194 0.806

--------------------------------------------------------------

P(LAG2) C0 C1 C2 C3 C4

C0 0.847 0.153 0.000 0.000 0.000

C1 0.081 0.789 0.129 0.000 0.000

C2 0.005 0.098 0.793 0.098 0.005

C3 0.000 0.000 0.094 0.871 0.035

C4 0.000 0.000 0.000 0.102 0.898

--------------------------------------------------------------

P(LAG3) C0 C1 C2 C3 C4

C0 0.885 0.098 0.000 0.016 0.000

C1 0.039 0.814 0.140 0.000 0.008

C2 0.005 0.094 0.777 0.119 0.005

C3 0.000 0.023 0.129 0.754 0.094

C4 0.000 0.000 0.000 0.097 0.903

--------------------------------------------------------------

P(LAG4) C0 C1 C2 C3 C4

C0 0.333 0.667 0.000 0.000 0.000

C1 0.048 0.774 0.161 0.016 0.000

C2 0.011 0.161 0.747 0.080 0.000

C3 0.000 0.010 0.062 0.896 0.031

C4 0.000 0.000 0.000 0.024 0.976

--------------------------------------------------------------

Visualize the (spatial) Markov transition probability matrix¶

[29]:

# we use seaborn to create a heatmap (`pip install seaborn` to install seaborn if you do not have it)

import seaborn as sns

sns.set()

sns_kws = dict(

annot=True,

linewidths=0.5,

cbar=True,

vmin=0,

vmax=1,

square=True,

cmap="YlGn",

fmt=".3f",

)

fig, ax = plt.subplots(figsize=(5, 4))

im = sns.heatmap(sm.p, ax=ax, **sns_kws)

[30]:

fig, axes = plt.subplots(2, 3, figsize=(15, 8))

for i in range(2):

for j in range(3):

ax = axes[i, j]

if i == 1 and j == 2:

ax.axis("off")

continue

p_temp = sm.P[i * 3 + j]

im = sns.heatmap(p_temp, ax=ax, **sns_kws)

ax.set_title(f"Spatial Lag {i*3+j}", fontsize=13)

[31]:

fig, axes = plt.subplots(2, 3, figsize=(15, 8))

for i in range(2):

for j in range(3):

ax = axes[i, j]

if i == 0 and j == 0:

im = sns.heatmap(sm.p, ax=ax, **sns_kws)

ax.set_title("Global", fontsize=13)

else:

p_temp = sm.P[i * 3 + j - 1]

im = sns.heatmap(p_temp, ax=ax, **sns_kws)

ax.set_title(f"Spatial Lag {i*3+j}", fontsize=13)

The probability of a poor state remaining poor is 0.963 if their neighbors are in the 1st quintile and 0.798 if their neighbors are in the 2nd quintile. The probability of a rich economy remaining rich is 0.977 if their neighbors are in the 5th quintile, but if their neighbors are in the 4th quintile this drops to 0.903.

We can also explore the different steady state distributions implied by these different transition probabilities:

[32]:

print(sm.S)

[[0.43509425180358385 0.26353269723062606 0.20363043984175005

0.06841982778682802 0.02932278333721201]

[0.13391287161229612 0.33993304560752513 0.2515303622306193

0.2334301624671256 0.04119355808243394]

[0.1212486936587668 0.2113744395374709 0.2635100967535313

0.29013417388666013 0.1137325961635708]

[0.0776412957952553 0.19748805820569296 0.2535263610085902

0.22480415152429764 0.24654013346616394]

[0.017767810936691393 0.19964349376114082 0.1900983267207176

0.2552469668219194 0.3372434017595308]]

The long run distribution for states with poor (rich) neighbors has 0.435 (0.018) of the values in the first quintile, 0.263 (0.200) in the second quintile, 0.204 (0.190) in the third, 0.0684 (0.255) in the fourth and 0.029 (0.337) in the fifth quintile. And, finally the spatially conditional mean first passage times:

[33]:

print(sm.F)

[[[ 2.29835259 28.95614035 46.14285714 80.80952381 279.42857143]

[ 33.86549708 3.79459555 22.57142857 57.23809524 255.85714286]

[ 43.60233918 9.73684211 4.91085714 34.66666667 233.28571429]

[ 46.62865497 12.76315789 6.25714286 14.61564626 198.61904762]

[ 52.62865497 18.76315789 12.25714286 6. 34.1031746 ]]

[[ 7.46754205 9.70574606 25.76785714 74.53116883 194.23446197]

[ 27.76691978 2.94175577 24.97142857 73.73474026 193.4380334 ]

[ 53.57477715 28.48447637 3.97566318 48.76331169 168.46660482]

[ 72.03631562 46.94601483 18.46153846 4.28393653 119.70329314]

[ 77.17917276 52.08887197 23.6043956 5.14285714 24.27564033]]

[[ 8.24751154 6.53333333 18.38765432 40.70864198 112.76732026]

[ 47.35040872 4.73094099 11.85432099 34.17530864 106.23398693]

[ 69.42288828 24.76666667 3.794921 22.32098765 94.37966594]

[ 83.72288828 39.06666667 14.3 3.44668119 76.36702977]

[ 93.52288828 48.86666667 24.1 9.8 8.79255406]]

[[ 12.87974382 13.34847151 19.83446328 28.47257282 55.82395142]

[ 99.46114206 5.06359731 10.54545198 23.05133495 49.68944423]

[117.76777159 23.03735526 3.94436301 15.0843986 43.57927247]

[127.89752089 32.4393006 14.56853107 4.44831643 31.63099455]

[138.24752089 42.7893006 24.91853107 10.35 4.05613474]]

[[ 56.2815534 1.5 10.57236842 27.02173913 110.54347826]

[ 82.9223301 5.00892857 9.07236842 25.52173913 109.04347826]

[ 97.17718447 19.53125 5.26043557 21.42391304 104.94565217]

[127.1407767 48.74107143 33.29605263 3.91777427 83.52173913]

[169.6407767 91.24107143 75.79605263 42.5 2.96521739]]]

States in the first income quintile with neighbors in the first quintile return to the first quintile after 2.298 years, after leaving the first quintile. They enter the fourth quintile 80.810 years after leaving the first quintile, on average. Poor states within neighbors in the fourth quintile return to the first quintile, on average, after 12.88 years, and would enter the fourth quintile after 28.473 years.

Tests for this conditional type of spatial dependence include Likelihood Ratio (LR) test and \(\chi^2\) test (Bickenbach and Bode, 2003) as well as a test based on information theory (Kullback et al., 1962). For the first two tests, we could proceed as follows to acquire their statistics, DOF and p-value.

[34]:

giddy.markov.Homogeneity_Results(sm.T).summary()

--------------------------------------------------

Markov Homogeneity Test

--------------------------------------------------

Number of classes: 5

Number of transitions: 3840

Number of regimes: 5

Regime names: 0, 1, 2, 3, 4

--------------------------------------------------

Test LR Chi-2

Stat. 170.659 200.624

DOF 60 60

p-value 0.000 0.000

--------------------------------------------------

P(H0) 0 1 2 3 4

0 0.915 0.075 0.009 0.001 0.000

1 0.066 0.827 0.105 0.001 0.001

2 0.005 0.103 0.794 0.095 0.003

3 0.000 0.009 0.094 0.849 0.048

4 0.000 0.000 0.000 0.062 0.938

--------------------------------------------------

P(0) 0 1 2 3 4

0 0.963 0.030 0.006 0.000 0.000

1 0.060 0.832 0.107 0.000 0.000

2 0.000 0.140 0.740 0.120 0.000

3 0.000 0.036 0.321 0.571 0.071

4 0.000 0.000 0.000 0.167 0.833

--------------------------------------------------

P(1) 0 1 2 3 4

0 0.798 0.168 0.034 0.000 0.000

1 0.075 0.882 0.042 0.000 0.000

2 0.005 0.070 0.866 0.059 0.000

3 0.000 0.000 0.064 0.902 0.034

4 0.000 0.000 0.000 0.194 0.806

--------------------------------------------------

P(2) 0 1 2 3 4

0 0.847 0.153 0.000 0.000 0.000

1 0.081 0.789 0.129 0.000 0.000

2 0.005 0.098 0.793 0.098 0.005

3 0.000 0.000 0.094 0.871 0.035

4 0.000 0.000 0.000 0.102 0.898

--------------------------------------------------

P(3) 0 1 2 3 4

0 0.885 0.098 0.000 0.016 0.000

1 0.039 0.814 0.140 0.000 0.008

2 0.005 0.094 0.777 0.119 0.005

3 0.000 0.023 0.129 0.754 0.094

4 0.000 0.000 0.000 0.097 0.903

--------------------------------------------------

P(4) 0 1 2 3 4

0 0.333 0.667 0.000 0.000 0.000

1 0.048 0.774 0.161 0.016 0.000

2 0.011 0.161 0.747 0.080 0.000

3 0.000 0.010 0.062 0.896 0.031

4 0.000 0.000 0.000 0.024 0.976

--------------------------------------------------

From the above summary table, we can observe that the observed LR test statistic is 170.659 and the observed \(\chi^2\) test statistic is 200.624. Their p-values are 0.000, which leads to the rejection of the null hypothesis of conditional spatial independence.

For the last (information theory-based) test, we call the function kullback. The result is consistent with LR and \(\chi^2\) tests. As shown below, the observed test statistic is 230.03 and its p-value is 2.22e-16, leading to the rejection of the null.

[35]:

print(giddy.markov.kullback(sm.T))

{'Conditional homogeneity': 230.02662463753222, 'Conditional homogeneity dof': 80, 'Conditional homogeneity pvalue': 2.220446049250313e-16}

LISA Markov¶

giddy.markov.LISA_Markov(

self, y, w, permutations=0, significance_level=0.05, geoda_quads=False

)

The Spatial Markov conditions the transitions on the value of the spatial lag for an observation at the beginning of the transition period. An alternative approach to spatial dynamics is to consider the joint transitions of an observation and its spatial lag in the distribution. By exploiting the form of the static LISA and embedding it in a dynamic context we develop the LISA Markov in which the states of the chain are defined as the four quadrants in the Moran scatter plot, namely,

HH(=1), LH(=2), LL(=3), HL(=4). Continuing on with our US example, the LISA transitions are:

[36]:

lm = giddy.markov.LISA_Markov(pci.T, w)

print(lm.classes)

[1 2 3 4]

The LISA transitions are:

[37]:

print(lm.transitions)

[[1.087e+03 4.400e+01 4.000e+00 3.400e+01]

[4.100e+01 4.700e+02 3.600e+01 1.000e+00]

[5.000e+00 3.400e+01 1.422e+03 3.900e+01]

[3.000e+01 1.000e+00 4.000e+01 5.520e+02]]

and the estimated transition probability matrix is:

[38]:

print(lm.p)

[[0.92985458 0.03763901 0.00342173 0.02908469]

[0.07481752 0.85766423 0.06569343 0.00182482]

[0.00333333 0.02266667 0.948 0.026 ]

[0.04815409 0.00160514 0.06420546 0.88603531]]

The diagonal elements indicate the staying probabilities and we see that there is greater mobility for observations in quadrants 2 (LH) and 4 (HL) than 1 (HH) and 3 (LL).

The implied long run steady state distribution of the chain is:

[39]:

print(lm.steady_state)

[0.28561505 0.14190226 0.40493672 0.16754598]

again reflecting the dominance of quadrants 1 and 3 (positive autocorrelation). The mean first passage time for the LISAs is:

[40]:

print(giddy.ergodic.mfpt(lm.p))

[[ 3.50121609 37.93025465 40.55772829 43.17412009]

[31.72800152 7.04710419 28.68182751 49.91485137]

[52.44489385 47.42097495 2.46952168 43.75609676]

[38.76794022 51.51755827 26.31568558 5.96851095]]

To test for dependence between the dynamics of the region and its neighbors, we turn to \(\chi^2\) test of independence. Here, the \(\chi^2\) statistic, its p-value and degrees of freedom can be obtained from the attribute chi_2. As the p-value is 0.0, the null of independence is clearly rejected.

[41]:

print(lm.chi_2)

(1058.2079036003051, 0.0, 9)

Next steps¶

Simulation/prediction of Markov chain and spatial Markov chain

References¶

Rey, S. J. 2001. “Spatial Empirics for Economic Growth and Convergence.” Geographical Analysis 33 (3). Wiley Online Library: 195–214.

Bickenbach, F., and E. Bode. 2003. “Evaluating the Markov Property in Studies of Economic Convergence.” International Regional Science Review 26 (3): 363–92.

Kullback, S., M. Kupperman, and H. H. Ku. 1962. “Tests for Contingency Tables and Markov Chains.” Technometrics: A Journal of Statistics for the Physical, Chemical, and Engineering Sciences 4 (4). JSTOR: 573–608.